# a VERY basic list of named elements

car_list <- list(manufacturer = "Honda", vehicle = "Civic", year = 2020)Finding JSON Sources

I’ve covered some strategies for parsing JSON with a few methods in base R and/or tidyverse in a previous blog post. I’d like to go one step up in the chain, and talk about pulling raw data/JSON from sites. While having a direct link to JSON is common, in some situations where you’re scraping JavaScript fed by APIs the raw data source is not always as easy to find.

I have three examples for today:

- FiveThirtyEight 2020 NFL Predictions

- ESPN Win Percentage/play-by-play (embedded JSON)

- ESPN Public API

Web vs Analysis

Most of these JSON data sources are intended to be used with JavaScript methods, and have not been oriented to a “flat” data style. This means the JSON has lots of separations of the data for a specific use/purpose inside the site, and efficient singular representations of each data in JSON storage as opposed to normalized data with repeats in a dataframe. While extreme detail is out of scope for this blogpost, JSON is structured as a “collection of name/value pairs” or a “an ordered list of values”. This means it is typically represented in R as repeated lists of list elements, where the list elements can be named lists, vectors, dataframes, or character strings.

Alternatively typically data for analysis is usually most useful as a normalized rectangle eg a dataframe/tibble. “Under the hood a data frame is a list of equal length vectors” per Advanced R.

One step further is tidy data which is essentially “3rd normal form”. Hadley goes into more detail in his “Tidy Data” publication. The takeaway here is that web designers are optimizing for their extremely focused interactive JavaScript apps and websites, as opposed to novel analyses that we often want to work with. This is often why there are quite a few steps to “rectangle” a JSON.

An aside on Subsetting

Subsetting in R is done many different ways, and Hadley Wickham has an entire chapter dedicated to this in Advanced R. It’s worth reading through that chapter to better understand the nuance, but I’ll provide a very brief summary of the options.

“Subsetting a list works in the same way as subsetting an atomic vector. Using

[always returns a list;[[and$, … let you pull out elements of a list.”

When working with lists, you can typically use $ and [[ interchangeably to extract single list elements by name. [[ requires exact matching whereas $ allows for partial matching, so I typically prefer to use [[. To extract by location from base R you need to use [[.

purrrfunctionspluck()andchuck()implement a generalised form of[[that allow you to index deeply and flexibly into data structures.pluck()consistently returnsNULLwhen an element does not exist,chuck()always throws an error in that case.”

So in short, you can use $, [[ and pluck/chuck in many of the same ways. I’ll compare all the base R and purrr versions below (all should return “Honda”).

# $ subsets by name

car_list$manufacturer[1] "Honda"# notice partial match

car_list$man[1] "Honda"# [[ requires exact match or position

car_list[["manufacturer"]][1] "Honda"car_list[[1]][1] "Honda"# pluck and chuck provide a more strict version of [[

# and can subset by exact name or position

purrr::pluck(car_list, "manufacturer")[1] "Honda"purrr::pluck(car_list, 1)[1] "Honda"purrr::chuck(car_list, "manufacturer")[1] "Honda"purrr::chuck(car_list, 1)[1] "Honda"For one more warning of partial name matching with $, where we now have a case of two elements with similar names see below:

car_list2 <- list(manufacturer = "Honda", vehicle = "Civic", manufactured_year = 2020)

# partial match throws a null

car_list2$manNULL# exact name returns actual elements

car_list2$manufacturer[1] "Honda"An aside on JavaScript

If we dramatically oversimplify JavaScript or their R-based counterparts htmlwidgets, they are a combination of some type of JSON data and then functions to display or interact with that data.

We can quickly show a htmlwidget example via the fantastic reactable R package.

That gives us the power of JavaScript in R! However, what’s going on with this function behind the scenes? We can extract the dataframe that has now been represented as a JSON file from the htmlwidget!

table_data <- table_ex[["x"]][["tag"]][["attribs"]][["data"]]

table_data %>% class()[1] "json"This basic idea, that the data is embedded as JSON to fill the JavaScript app can be further applied to web-based apps! We can use a similar idea to scrape raw JSON or query a web API that returns JSON from a site.

FiveThirtyEight

FiveThirtyEight publishes their ELO ratings and playoff predictions for the NFL via a table at projects.fivethirtyeight.com/2020-nfl-predictions/. They are also kind enough to post this data as a download publicly! However, let’s see if we can “find” the data source feeding the JavaScript table.

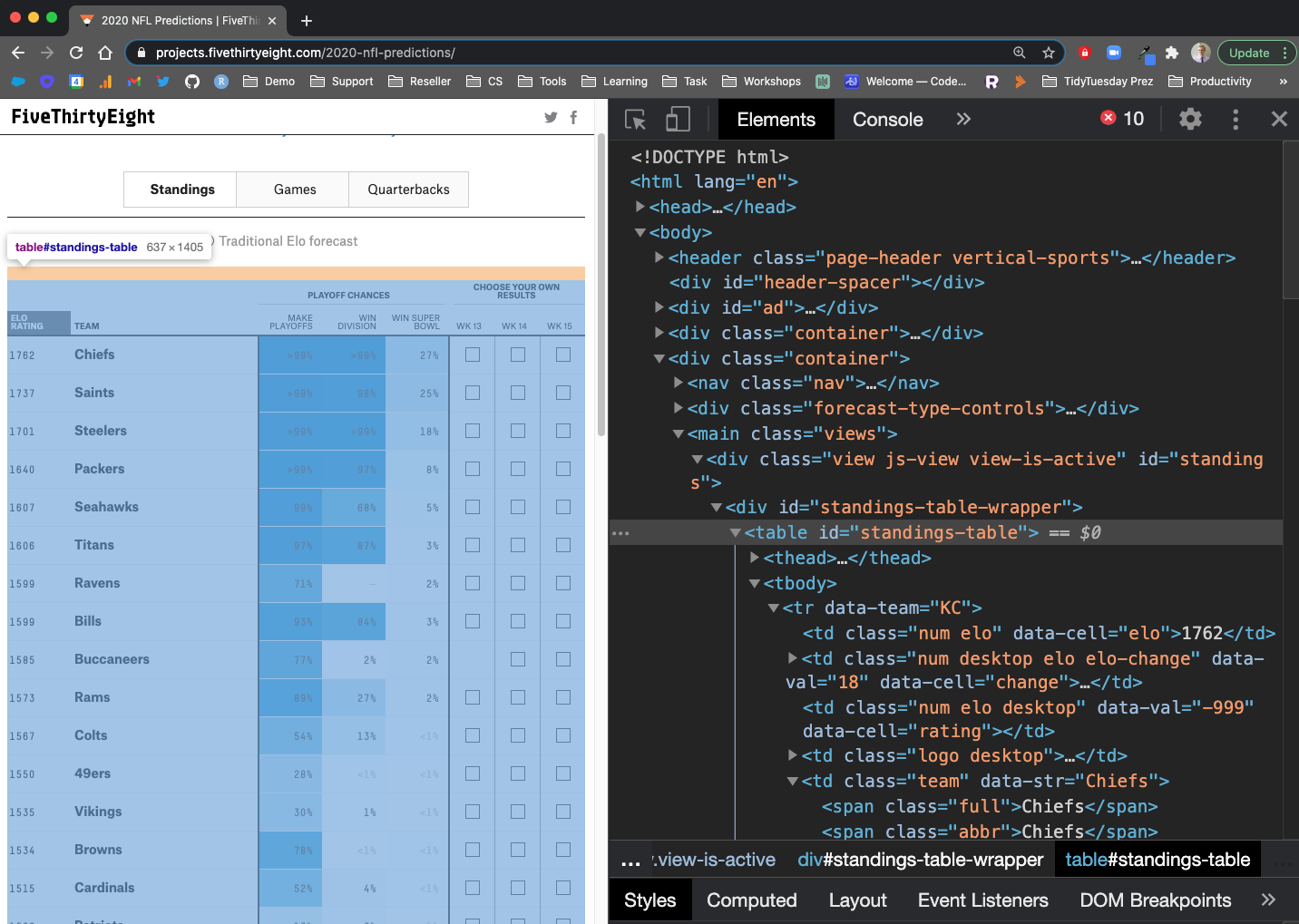

rvest

We can try our classical rvest based approach to scrape the HTML content and get back a table. However, the side effect of this is we’re returning the literal data with units, some combined columns, and other formatting. You’ll notice that all the columns show up as character and this introduces a lot of other work we’d have to do to “clean” the data.

library(xml2)

library(rvest)

url_538 <- "https://projects.fivethirtyeight.com/2020-nfl-predictions/"

raw_538_html <- read_html(url_538)

raw_538_table <- raw_538_html %>%

html_node("#standings-table") %>%

html_table(fill = TRUE) %>%

janitor::clean_names() %>%

tibble()

raw_538_table %>% glimpse()Rows: 36

Columns: 12

$ x <chr> "", "", "", "elo with top qbelo rating", "1734", "17…

$ x_2 <chr> "", "", "", "1-week change", "+34", "", "", "", "", …

$ x_3 <chr> "", "", "", "current qb adj.", "", "", "", "", "", "…

$ x_4 <chr> "", "playoff chances", "", "", "", "", "", "", "", "…

$ x_5 <chr> "playoff chances", "playoff chances", "", "team", "B…

$ x_6 <chr> "playoff chances", "playoff chances", "", "division"…

$ playoff_chances <chr> "playoff chances", "playoff chances", "", "make div.…

$ playoff_chances_2 <chr> "playoff chances", NA, "", "make conf. champ", "✓", …

$ playoff_chances_3 <chr> NA, NA, "", "make super bowl", "✓", "✓", "—", "—", "…

$ playoff_chances_4 <chr> NA, NA, "", "win super bowl", "✓", "—", "—", "—", "—…

$ x_7 <lgl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, …

$ x_8 <lgl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, …Inspect + Network

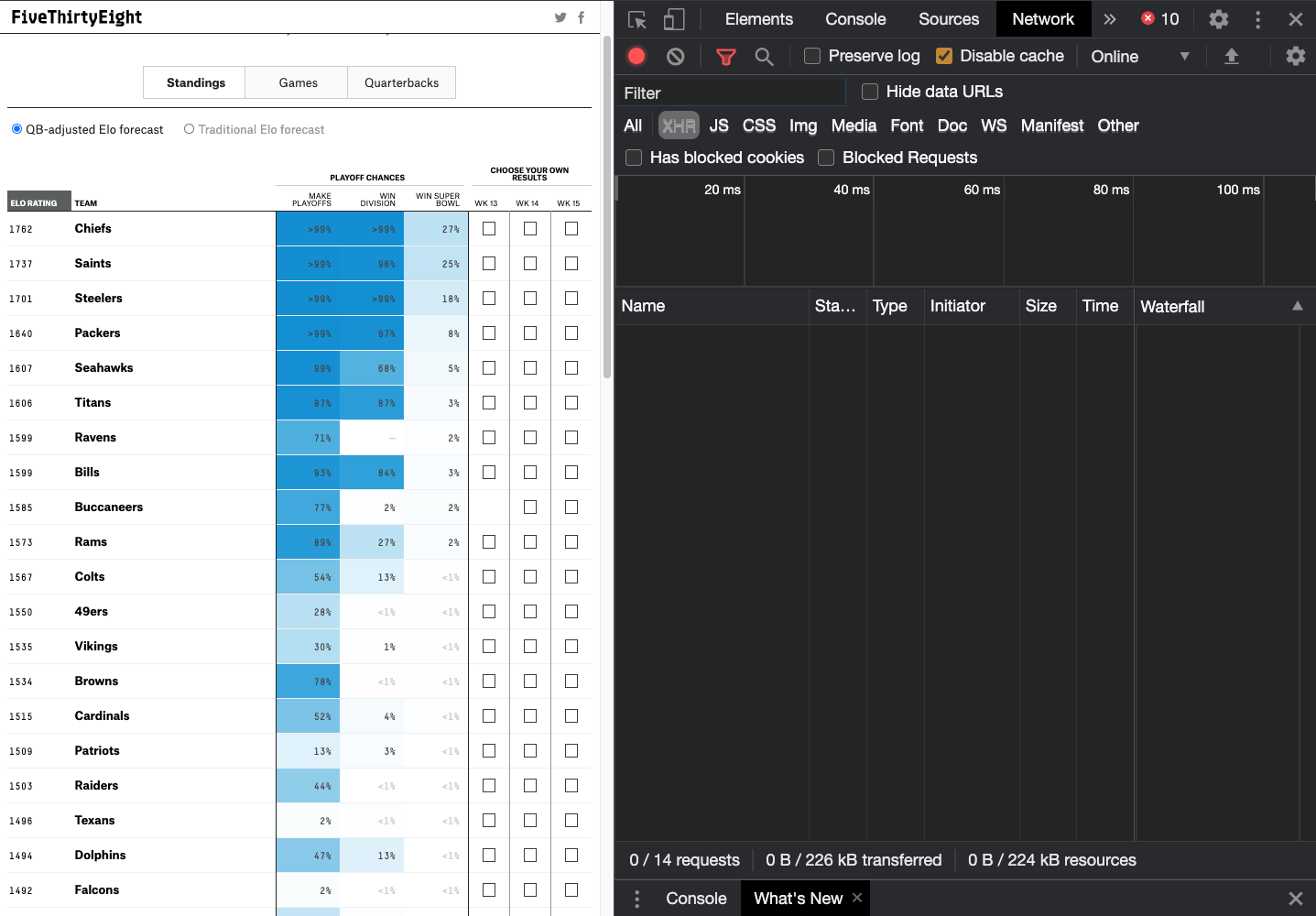

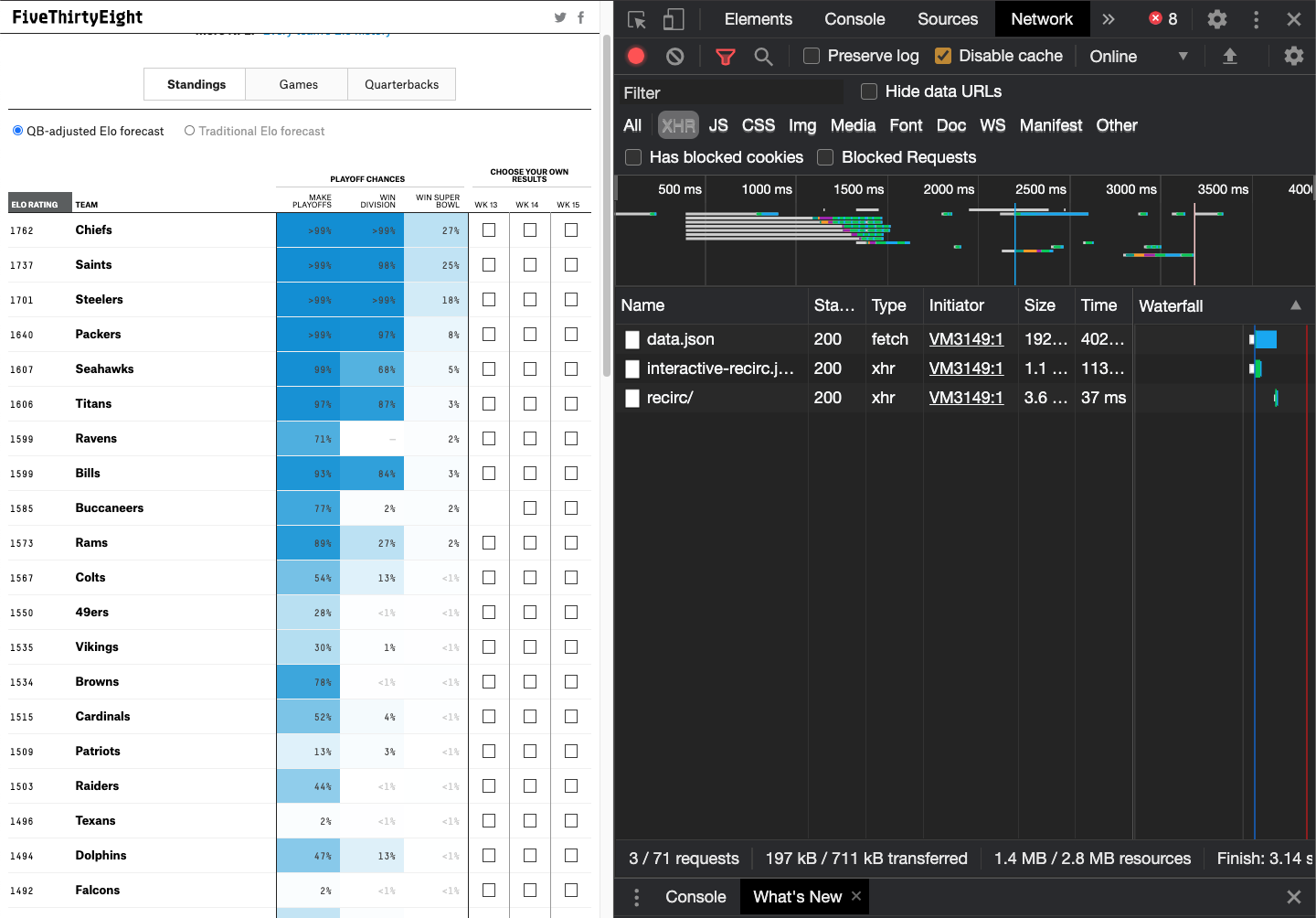

Alternatively, we can Right Click + inspect the site, go to the Network tab, reload the site and see what sources are loaded. Again, FiveThirtyEight is very kind and essentially just loads the JSON as data.json.

I have screenshots below of each item, and the below is a short video of the entire process.

We can click over to the Network Tab after inspecting the site

We need to reload the web page to find sources

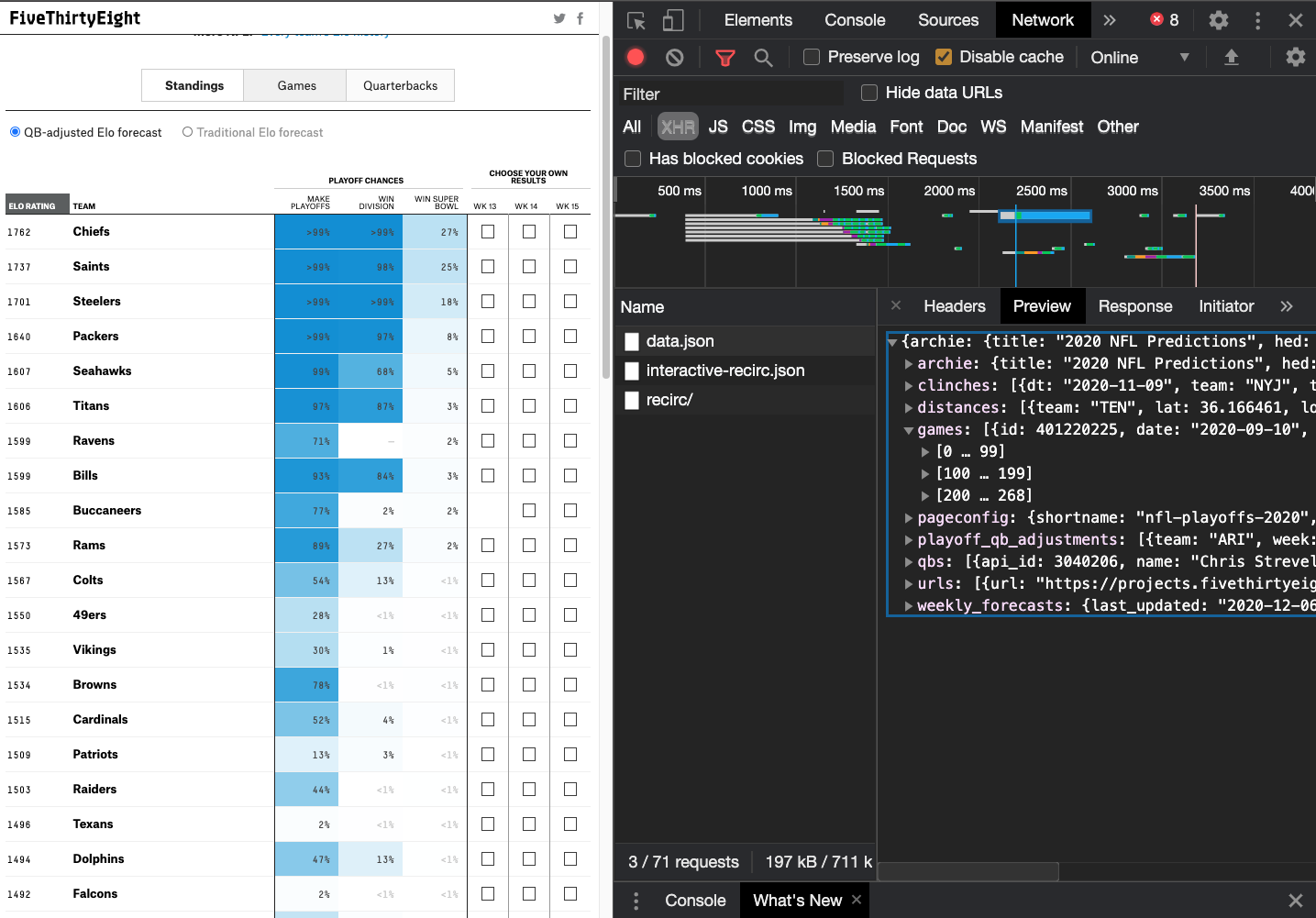

We can examine specific elements by clicking on them, which then shows us JSON!

In our browser inspect, we can see the structure, and that it has some info about games, QBs, and forecasts. This looks like the right dataset! You can right click on data.json and open it in a new page. The url is https://projects.fivethirtyeight.com/2020-nfl-predictions/data.json, and note that we can adjust the year to get older or current data. So https://projects.fivethirtyeight.com/2019-nfl-predictions/data.json returns the data for 2019, and you can go all the way back to 2016! 2015 also exists, but with a different JSON structure, and AFAIK they don’t have data before 2015.

Read the JSON

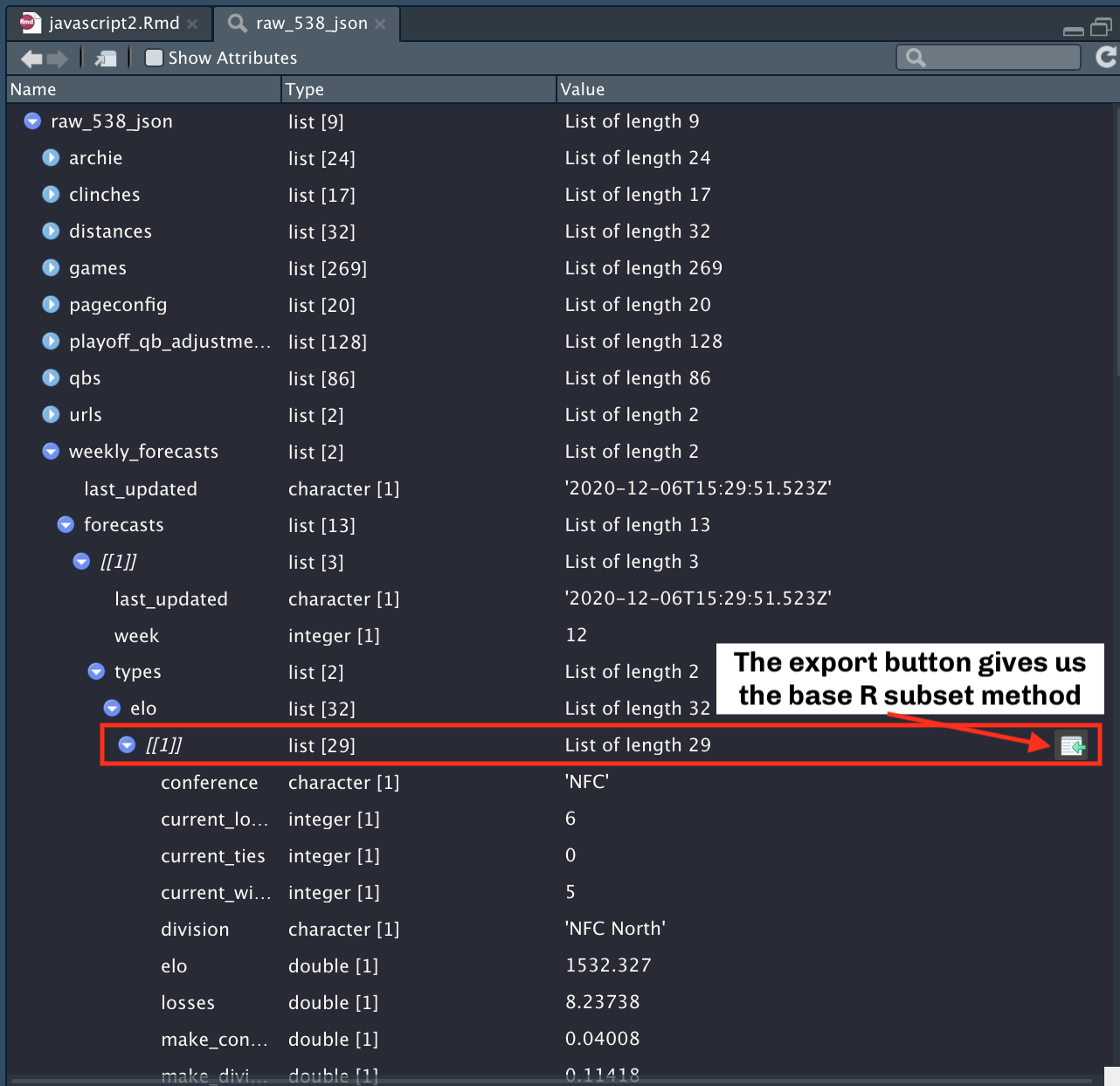

Now that we have a JSON source, we can read it into R with jsonlite. By using the RStudio viewer or listviewer::jsonedit() we can take a look at what the overall structure of the JSON.

library(jsonlite)

raw_538_json <- fromJSON("https://projects.fivethirtyeight.com/2020-nfl-predictions/data.json", simplifyVector = FALSE)

raw_538_json %>% str(max.level = 1)List of 9

$ archie :List of 24

$ clinches :List of 114

$ distances :List of 32

$ games :List of 269

$ pageconfig :List of 20

$ playoff_qb_adjustments:List of 32

$ qbs :List of 87

$ urls :List of 2

$ weekly_forecasts :List of 2Don’t forget that the RStudio Viewer also gives you the ability to export the base R code to access a specific component of the JSON!

Which gives us the following code:

raw_538_json[["weekly_forecasts"]][["forecasts"]][[1]][["types"]][["elo"]][[1]]

ex_538_data <- raw_538_json[["weekly_forecasts"]][["forecasts"]][[1]][["types"]][["elo"]][[1]]

ex_538_data %>% str()List of 29

$ conference : chr "NFC"

$ current_losses : int 9

$ current_ties : int 0

$ current_wins : int 7

$ division : chr "NFC North"

$ elo : num 1489

$ losses : int 9

$ make_conference_champ: int 0

$ make_divisional_round: int 0

$ make_playoffs : int 0

$ make_superbowl : int 0

$ name : chr "MIN"

$ point_diff : int -45

$ points_allowed : int 475

$ points_scored : int 430

$ rating : num 1481

$ rating_current : num 1502

$ rating_top : num 1502

$ seed_1 : int 0

$ seed_2 : int 0

$ seed_3 : int 0

$ seed_4 : int 0

$ seed_5 : int 0

$ seed_6 : int 0

$ seed_7 : int 0

$ ties : int 0

$ win_division : int 0

$ win_superbowl : int 0

$ wins : int 7We can also play around with listviewer.

Since these are unique list elements, we can turn it into a dataframe! This is the current projection for Minnesota.

data.frame(ex_538_data) %>% glimpse()Rows: 1

Columns: 29

$ conference <chr> "NFC"

$ current_losses <int> 9

$ current_ties <int> 0

$ current_wins <int> 7

$ division <chr> "NFC North"

$ elo <dbl> 1489.284

$ losses <int> 9

$ make_conference_champ <int> 0

$ make_divisional_round <int> 0

$ make_playoffs <int> 0

$ make_superbowl <int> 0

$ name <chr> "MIN"

$ point_diff <int> -45

$ points_allowed <int> 475

$ points_scored <int> 430

$ rating <dbl> 1480.954

$ rating_current <dbl> 1501.583

$ rating_top <dbl> 1501.583

$ seed_1 <int> 0

$ seed_2 <int> 0

$ seed_3 <int> 0

$ seed_4 <int> 0

$ seed_5 <int> 0

$ seed_6 <int> 0

$ seed_7 <int> 0

$ ties <int> 0

$ win_division <int> 0

$ win_superbowl <int> 0

$ wins <int> 7Parse the JSON

Ok so we’ve found at least one set of data that is pretty dataframe ready, let’s clean it all up in bulk! I’m most interested in the weekly_forecasts data, so let’s start there.

List of 2

$ last_updated: chr "2021-02-08T03:15:55.357Z"

$ forecasts :List of 22Ok so last_updated is good to know, but not something I need right now. Let’s go one step deeper into forecasts.

List of 22

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3

$ :List of 3Ok now we have a list of 14 lists. This may seem not helpful, BUT remember that as of 2020-12-12, we are in Week 14 of the NFL season! So this is likely 1 list for each of the weekly forecasts, which makes sense as we are in weekly_forecasts$forecasts!

At this point, I think I’m at the right data, so I’m going to take the list and put it in a tibble via tibble::enframe().

raw_538_json$weekly_forecasts$forecasts %>%

enframe()# A tibble: 22 × 2

name value

<int> <list>

1 1 <named list [3]>

2 2 <named list [3]>

3 3 <named list [3]>

4 4 <named list [3]>

5 5 <named list [3]>

6 6 <named list [3]>

7 7 <named list [3]>

8 8 <named list [3]>

9 9 <named list [3]>

10 10 <named list [3]>

# … with 12 more rowsWe need to separate the list items out, so we can try unnest_auto() to see if tidyr can parse the correct structure. Note that unnest_auto works and tells us we could have used unnest_wider().

Using `unnest_wider(value)`; elements have 3 names in common# A tibble: 22 × 4

name last_updated week types

<int> <chr> <int> <list>

1 1 2021-02-08T03:15:55.357Z 21 <named list [2]>

2 2 2021-01-25T03:10:04.809Z 20 <named list [2]>

3 3 2021-01-22T19:28:01.278Z 19 <named list [2]>

4 4 2021-01-15T01:25:55.192Z 18 <named list [2]>

5 5 2021-01-09T16:38:13.126Z 17 <named list [2]>

6 6 2021-01-03T16:03:42.517Z 16 <named list [2]>

7 7 2020-12-24T01:32:05.045Z 15 <named list [2]>

8 8 2020-12-16T14:13:49.344Z 14 <named list [2]>

9 9 2020-12-10T16:21:56.731Z 13 <named list [2]>

10 10 2020-12-06T15:29:51.523Z 12 <named list [2]>

# … with 12 more rowsWe can keep going on the types list column! Note that as unnest_auto() tells us “what” to do, I’m going to replace it with the appropriate function.

raw_538_json$weekly_forecasts$forecasts %>%

enframe() %>%

unnest_wider(value) %>% # changed per recommendation

unnest_auto(types)Using `unnest_wider(types)`; elements have 2 names in common# A tibble: 22 × 5

name last_updated week elo rating

<int> <chr> <int> <list> <list>

1 1 2021-02-08T03:15:55.357Z 21 <list [32]> <list [32]>

2 2 2021-01-25T03:10:04.809Z 20 <list [32]> <list [32]>

3 3 2021-01-22T19:28:01.278Z 19 <list [32]> <list [32]>

4 4 2021-01-15T01:25:55.192Z 18 <list [32]> <list [32]>

5 5 2021-01-09T16:38:13.126Z 17 <list [32]> <list [32]>

6 6 2021-01-03T16:03:42.517Z 16 <list [32]> <list [32]>

7 7 2020-12-24T01:32:05.045Z 15 <list [32]> <list [32]>

8 8 2020-12-16T14:13:49.344Z 14 <list [32]> <list [32]>

9 9 2020-12-10T16:21:56.731Z 13 <list [32]> <list [32]>

10 10 2020-12-06T15:29:51.523Z 12 <list [32]> <list [32]>

# … with 12 more rowsWe now have a list of 32 x 14 weeks. There are 32 teams so we’re most likely at the appropriate depth and can go longer vs wider now. We can also see that name/week don’t align so let’s drop name, and we can use unchop() to increase the length of the data for elo and rating at the same time.

raw_538_json$weekly_forecasts$forecasts %>%

enframe() %>%

unnest_wider(value) %>%

unnest_wider(types) %>% # Changed per recommendation

unchop(cols = c(elo, rating)) %>%

select(-name)# A tibble: 704 × 4

last_updated week elo rating

<chr> <int> <list> <list>

1 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

2 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

3 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

4 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

5 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

6 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

7 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

8 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

9 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

10 2021-02-08T03:15:55.357Z 21 <named list [29]> <named list [29]>

# … with 694 more rowsWe now have 14 weeks x 32 teams (448 rows), along with last_updated, week, elo and rating data. We can use unnest_auto() on the elo column to see what’s the next step. Rating is duplicated so there’s been name repair to avoid duplicated names. We get the following warning that tells us this has occurred.

* rating -> rating...18* rating -> rating...32

You’ll see that I’ve done unnest_auto() on both elo and rating...32 (the renamed rating list column). If you look closely at the names, we can also see that there is duplication of the names for MANY of the columns. A tricky part is that elo/rating each have a LOT of overlap, and are most appropriate as separate data frames that could be stacked if desired.

elo_raw <- raw_538_json$weekly_forecasts$forecasts %>%

enframe() %>%

unnest_wider(value) %>%

unnest_wider(types) %>% # Changed per recommendation

unchop(cols = c(elo, rating)) %>%

select(-name) %>%

unnest_auto(elo) %>%

unnest_auto(rating...32)Using `unnest_wider(elo)`; elements have 29 names in commonNew names:

Using `unnest_wider(rating...32)`; elements have 29 names in common

New names:

• `rating` -> `rating...18`

• `rating` -> `rating...32` [1] "last_updated" "week"

[3] "conference...3" "current_losses...4"

[5] "current_ties...5" "current_wins...6"

[7] "division...7" "elo...8"

[9] "losses...9" "make_conference_champ...10"

[11] "make_divisional_round...11" "make_playoffs...12"

[13] "make_superbowl...13" "name...14"

[15] "point_diff...15" "points_allowed...16"

[17] "points_scored...17" "rating...18"

[19] "rating_current...19" "rating_top...20"

[21] "seed_1...21" "seed_2...22"

[23] "seed_3...23" "seed_4...24"

[25] "seed_5...25" "seed_6...26"

[27] "seed_7...27" "ties...28"

[29] "win_division...29" "win_superbowl...30"

[31] "wins...31" "conference...32"

[33] "current_losses...33" "current_ties...34"

[35] "current_wins...35" "division...36"

[37] "elo...37" "losses...38"

[39] "make_conference_champ...39" "make_divisional_round...40"

[41] "make_playoffs...41" "make_superbowl...42"

[43] "name...43" "point_diff...44"

[45] "points_allowed...45" "points_scored...46"

[47] "rating...47" "rating_current...48"

[49] "rating_top...49" "seed_1...50"

[51] "seed_2...51" "seed_3...52"

[53] "seed_4...53" "seed_5...54"

[55] "seed_6...55" "seed_7...56"

[57] "ties...57" "win_division...58"

[59] "win_superbowl...59" "wins...60" Let’s try this again, with the knowledge that elo and rating should be treated separately for now. Since they have the same names, we can also combine the data by stacking (bind_rows()). I have added a new column so that we can differentiate between the two datasets (ELO vs Rating).

weekly_raw <- raw_538_json$weekly_forecasts$forecasts %>%

enframe() %>%

unnest_wider(value) %>%

unnest_wider(types) %>%

select(-name) %>%

unchop(cols = c(elo, rating))

weekly_elo <- weekly_raw %>%

select(-rating) %>%

unnest_wider(elo) %>%

mutate(measure = "ELO", .after = last_updated)

weekly_rating <- weekly_raw %>%

select(-elo) %>%

unnest_wider(rating) %>%

mutate(measure = "Rating", .after = last_updated)

# confirm same names

all.equal(

names(weekly_elo),

names(weekly_rating)

)[1] TRUEweekly_forecasts <- bind_rows(weekly_elo, weekly_rating)

weekly_forecasts %>% glimpse()Rows: 1,408

Columns: 32

$ last_updated <chr> "2021-02-08T03:15:55.357Z", "2021-02-08T03:15:55…

$ measure <chr> "ELO", "ELO", "ELO", "ELO", "ELO", "ELO", "ELO",…

$ week <int> 21, 21, 21, 21, 21, 21, 21, 21, 21, 21, 21, 21, …

$ conference <chr> "NFC", "AFC", "NFC", "NFC", "NFC", "AFC", "AFC",…

$ current_losses <int> 9, 6, 10, 12, 11, 11, 14, 11, 6, 10, 6, 5, 10, 9…

$ current_ties <int> 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ current_wins <int> 7, 10, 7, 4, 5, 4, 2, 5, 12, 6, 11, 13, 6, 7, 15…

$ division <chr> "NFC North", "AFC East", "NFC East", "NFC South"…

$ elo <dbl> 1489.284, 1545.820, 1442.351, 1474.240, 1333.468…

$ losses <dbl> 9, 6, 9, 12, 11, 11, 14, 11, 5, 10, 5, 4, 10, 9,…

$ make_conference_champ <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, …

$ make_divisional_round <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, …

$ make_playoffs <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 1, 1, …

$ make_superbowl <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ name <chr> "MIN", "MIA", "WSH", "ATL", "DET", "CIN", "NYJ",…

$ point_diff <dbl> -45, 66, 6, -18, -142, -113, -214, -123, 165, -7…

$ points_allowed <dbl> 475, 338, 329, 414, 519, 424, 457, 446, 303, 357…

$ points_scored <dbl> 430, 404, 335, 396, 377, 311, 243, 323, 468, 280…

$ rating <dbl> 1480.954, 1565.307, 1446.254, 1449.242, 1340.178…

$ rating_current <dbl> 1501.583, 1529.565, 1462.552, 1448.424, 1338.309…

$ rating_top <dbl> 1501.583, 1529.565, 1462.552, 1448.424, 1338.309…

$ seed_1 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ seed_2 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, …

$ seed_3 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, …

$ seed_4 <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, …

$ seed_5 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, …

$ seed_6 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ seed_7 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ ties <dbl> 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ win_division <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1, 1, …

$ win_superbowl <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ wins <dbl> 7, 10, 7, 4, 5, 4, 2, 5, 11, 6, 11, 12, 6, 7, 13…Create a Function

Finally, we can combine the techniques we showed above as a function (and I’ve added it to espnscrapeR). Now we can use this to get data throughout the current season OR get info from past seasons (2015 and beyond). Again, note that 2015 has a different JSON structure but the core forecasts portion is still the same.

get_weekly_forecast <- function(season) {

# Fill URL and read in JSON

raw_url <- glue::glue("https://projects.fivethirtyeight.com/{season}-nfl-predictions/data.json")

raw_json <- fromJSON(raw_url, simplifyVector = FALSE)

# get the two datasets

weekly_raw <- raw_538_json$weekly_forecasts$forecasts %>%

enframe() %>%

unnest_wider(value) %>%

unnest_wider(types) %>%

select(-name) %>%

unchop(cols = c(elo, rating))

# get ELO

weekly_elo <- weekly_raw %>%

select(-rating) %>%

unnest_wider(elo) %>%

mutate(measure = "ELO", .after = last_updated)

# get Rating

weekly_rating <- weekly_raw %>%

select(-elo) %>%

unnest_wider(rating) %>%

mutate(measure = "Rating", .after = last_updated)

# combine

bind_rows(weekly_elo, weekly_rating)

}

get_weekly_forecast(2015) %>%

glimpse()Rows: 1,408

Columns: 32

$ last_updated <chr> "2021-02-08T03:15:55.357Z", "2021-02-08T03:15:55…

$ measure <chr> "ELO", "ELO", "ELO", "ELO", "ELO", "ELO", "ELO",…

$ week <int> 21, 21, 21, 21, 21, 21, 21, 21, 21, 21, 21, 21, …

$ conference <chr> "NFC", "AFC", "NFC", "NFC", "NFC", "AFC", "AFC",…

$ current_losses <int> 9, 6, 10, 12, 11, 11, 14, 11, 6, 10, 6, 5, 10, 9…

$ current_ties <int> 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ current_wins <int> 7, 10, 7, 4, 5, 4, 2, 5, 12, 6, 11, 13, 6, 7, 15…

$ division <chr> "NFC North", "AFC East", "NFC East", "NFC South"…

$ elo <dbl> 1489.284, 1545.820, 1442.351, 1474.240, 1333.468…

$ losses <dbl> 9, 6, 9, 12, 11, 11, 14, 11, 5, 10, 5, 4, 10, 9,…

$ make_conference_champ <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, …

$ make_divisional_round <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0, …

$ make_playoffs <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 1, 1, …

$ make_superbowl <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ name <chr> "MIN", "MIA", "WSH", "ATL", "DET", "CIN", "NYJ",…

$ point_diff <dbl> -45, 66, 6, -18, -142, -113, -214, -123, 165, -7…

$ points_allowed <dbl> 475, 338, 329, 414, 519, 424, 457, 446, 303, 357…

$ points_scored <dbl> 430, 404, 335, 396, 377, 311, 243, 323, 468, 280…

$ rating <dbl> 1480.954, 1565.307, 1446.254, 1449.242, 1340.178…

$ rating_current <dbl> 1501.583, 1529.565, 1462.552, 1448.424, 1338.309…

$ rating_top <dbl> 1501.583, 1529.565, 1462.552, 1448.424, 1338.309…

$ seed_1 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ seed_2 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, …

$ seed_3 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, …

$ seed_4 <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, …

$ seed_5 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, …

$ seed_6 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ seed_7 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ ties <dbl> 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ win_division <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1, 1, …

$ win_superbowl <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ wins <dbl> 7, 10, 7, 4, 5, 4, 2, 5, 11, 6, 11, 12, 6, 7, 13…Other FiveThirtyEight Data

There’s several other interesting data points in this JSON, but they’re also much easier to extract.

QB playoff adjustment values

Output

qb_playoff_adj# A tibble: 32 × 4

name team week qb_adj

<int> <chr> <int> <dbl>

1 1 ARI 21 17.3

2 2 ATL 21 -0.819

3 3 BAL 21 -2.72

4 4 BUF 21 33.1

5 5 CAR 21 4.26

6 6 CHI 21 19.2

7 7 CIN 21 -84.5

8 8 CLE 21 16.9

9 9 DAL 21 -60.9

10 10 DEN 21 15.3

# … with 22 more rowsGames Data

This one is interesting, it’s got ELO change as a result of win/loss along with the spread and ratings.

Using `unnest_wider(value)`; elements have 40 names in commonOutput

games_df# A tibble: 269 × 40

id date datetime week status team1 team2 neutral playoff score1 score2

<int> <chr> <chr> <int> <chr> <chr> <chr> <lgl> <chr> <int> <int>

1 4.01e8 2020… 2020-09… 1 post KC HOU FALSE <NA> 34 20

2 4.01e8 2020… 2020-09… 1 post DET CHI FALSE <NA> 23 27

3 4.01e8 2020… 2020-09… 1 post BAL CLE FALSE <NA> 38 6

4 4.01e8 2020… 2020-09… 1 post MIN GB FALSE <NA> 34 43

5 4.01e8 2020… 2020-09… 1 post JAX IND FALSE <NA> 27 20

6 4.01e8 2020… 2020-09… 1 post NE MIA FALSE <NA> 21 11

7 4.01e8 2020… 2020-09… 1 post BUF NYJ FALSE <NA> 27 17

8 4.01e8 2020… 2020-09… 1 post CAR OAK FALSE <NA> 30 34

9 4.01e8 2020… 2020-09… 1 post WSH PHI FALSE <NA> 27 17

10 4.01e8 2020… 2020-09… 1 post ATL SEA FALSE <NA> 25 38

# … with 259 more rows, and 29 more variables: overtime <lgl>, elo1_pre <dbl>,

# elo2_pre <dbl>, elo_spread <dbl>, elo_prob1 <dbl>, elo_prob2 <dbl>,

# elo1_post <dbl>, elo2_post <dbl>, rating1_pre <dbl>, rating2_pre <dbl>,

# rating_spread <dbl>, rating_prob1 <dbl>, rating_prob2 <dbl>,

# rating1_post <dbl>, rating2_post <dbl>, bettable <lgl>, outcome <dbl>,

# qb_adj1 <dbl>, qb_adj2 <dbl>, rest_adj1 <int>, rest_adj2 <int>,

# dist_adj <dbl>, rating1_top_qb <dbl>, rating2_top_qb <dbl>, …Distances

This data has the distances for each team to other locations/stadiums.

Output

distance_df# A tibble: 1,024 × 6

name team lat lon distances distances_id

<int> <chr> <dbl> <dbl> <dbl> <chr>

1 1 TEN 36.2 -86.8 0 TEN

2 1 TEN 36.2 -86.8 758. NYG

3 1 TEN 36.2 -86.8 471. PIT

4 1 TEN 36.2 -86.8 339. CAR

5 1 TEN 36.2 -86.8 595. BAL

6 1 TEN 36.2 -86.8 619. TB

7 1 TEN 36.2 -86.8 251. IND

8 1 TEN 36.2 -86.8 698. MIN

9 1 TEN 36.2 -86.8 1454. ARI

10 1 TEN 36.2 -86.8 634. DAL

# … with 1,014 more rowsQB Adjustment

I believe this is the in-season QB adjustment for each team.

Output

qb_adj# A tibble: 87 × 6

api_id name team priority elo_value starts

<int> <chr> <chr> <int> <int> <int>

1 3040206 Chris Streveler ARI 2 0 1

2 4035003 Jacob Eason IND 3 58 1

3 3124900 Jake Luton JAX 3 20 1

4 3915436 Steven Montez WSH 3 0 1

5 4241479 Tua Tagovailoa MIA 1 128 1

6 4038941 Justin Herbert LAC 1 200 1

7 4040715 Jalen Hurts PHI 1 120 1

8 3895785 Ben DiNucci DAL 3 6 1

9 4036378 Jordan Love GB 3 102 1

10 12471 Chase Daniel DET 2 33 1

# … with 77 more rowsESPN

ESPN has interactive win probability charts for their games. They also go a step farther than FiveThirtyEight and the JSON is embedded into the HTML “bundle”. They also have a hidden API, but I’m going to first show an example of how to get the JSON from within the page itself.

Example End Function

get_espn_win_prob <- function(game_id){

raw_url <-glue::glue("https://www.espn.com/nfl/game?gameId={game_id}")

raw_html <- raw_url %>%

read_html()

raw_text <- raw_html %>%

html_nodes("script") %>%

.[23] %>%

html_text()

raw_json <- raw_text %>%

gsub(".*(\\[\\{)", "\\1", .) %>%

gsub("(\\}\\]).*", "\\1", .)

parsed_json <- jsonlite::parse_json(raw_json)

raw_df <- parsed_json %>%

enframe() %>%

rename(row_id = name) %>%

unnest_wider(value) %>%

unnest_wider(play) %>%

hoist(period, quarter = "number") %>%

unnest_wider(start) %>%

hoist(team, pos_team_id = "id") %>%

hoist(clock, clock = "displayValue") %>%

hoist(type, play_type = "text") %>%

select(-type) %>%

janitor::clean_names() %>%

mutate(game_id = game_id)

raw_df

}Get the data

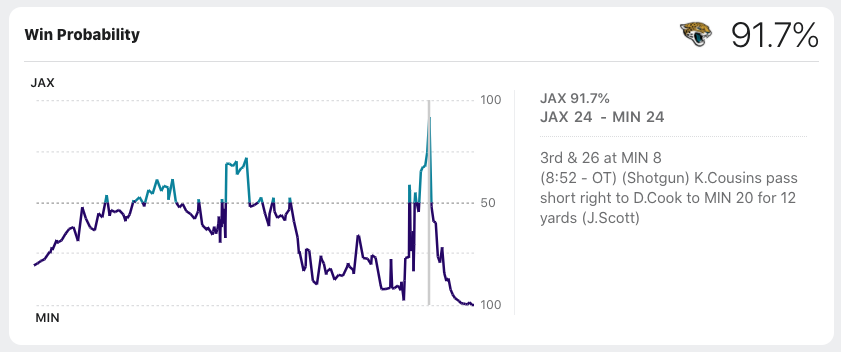

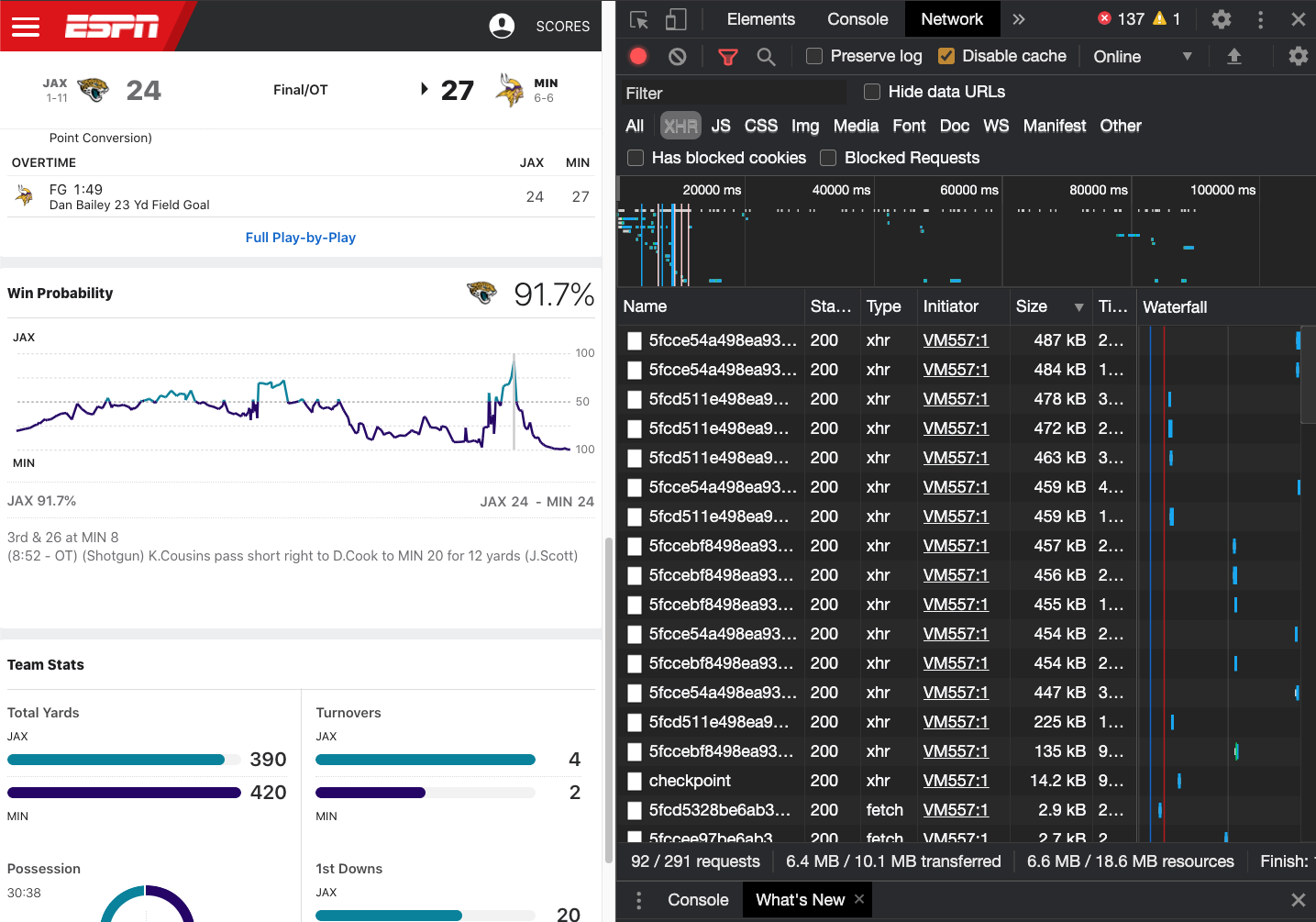

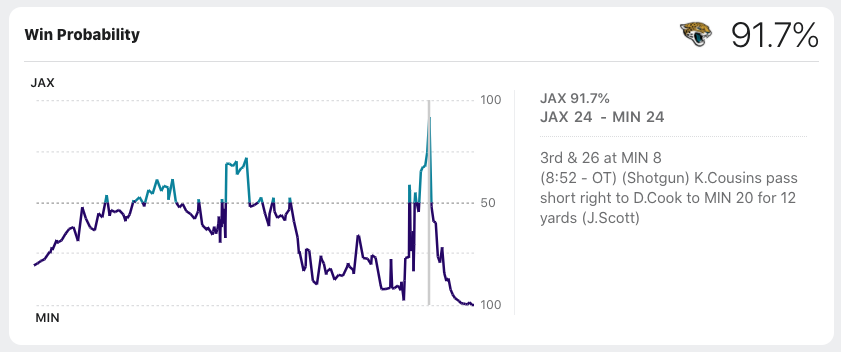

Let’s use an example from a pretty wild swing in Win Percentage from Week 13 of the 2020 NFL season. The Vikings and Jaguars went to overtime, with a lot of back and forth. Since there is an interactive data visualization, I’m assuming the JSON data is present there as well.

If we try our previous trick from FiveThirtyEight and the Inspect -> Network we get a total of… about 300 different requests! None of them appear big enough to be the “right” data. We’re expecting 4-5 Mb of data.

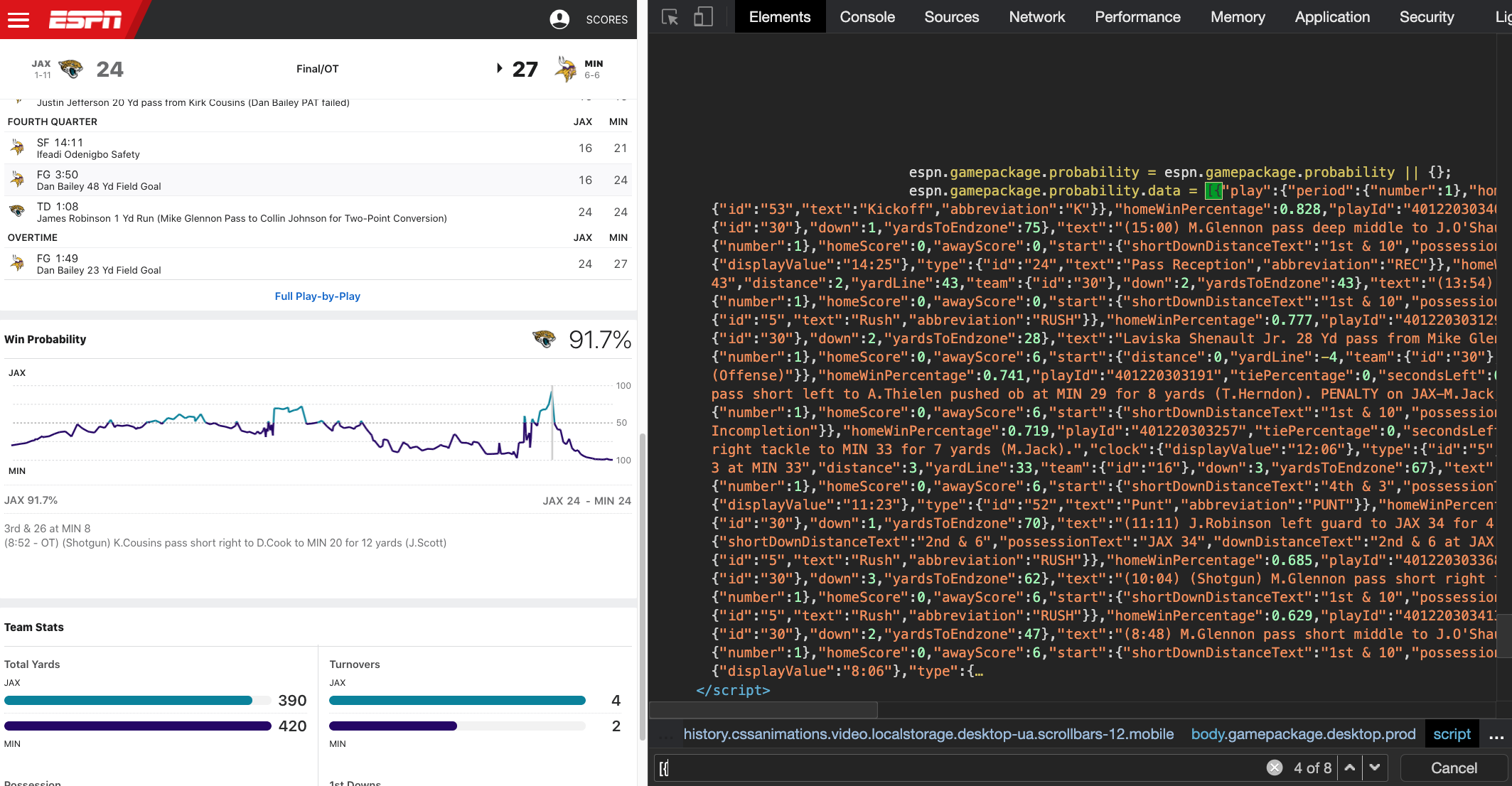

Another trick is to look for embedded JSON in the site itself. The basic representation of JSON is [{name: item}], so let’s try looking for [{ as the start of a JSON structure.

Inside the Google Chrome Dev tools we can search and find a few JSON files, including one inside some JavaScript called espn.gamepackage.probability! There’s a good amount of data there, but we need to extract it from the raw HTML. This JSON is inside a <script> object, so let’s parse the HTML and get script nodes.

espn_url <-glue::glue("https://www.espn.com/nfl/game?gameId=401220303")

raw_espn_html <- espn_url %>%

read_html()

raw_espn_html %>%

html_nodes("script") {xml_nodeset (27)}

[1] <script type="application/ld+json">\n\t{\n\t\t"@context": "https://schem ...

[2] <script type="text/javascript" src="https://dcf.espn.com/TWDC-DTCI/prod/ ...

[3] <script type="text/javascript">\n;(function(){\n\nfunction rc(a){for(var ...

[4] <script src="https://secure.espn.com/core/format/modules/head/i18n?editi ...

[5] <script src="https://a.espncdn.com/redesign/0.591.3/js/espn-head.js"></s ...

[6] <script>\n\t\t\tif (espn && espn.geoRedirect){\n\t\t\t\tespn.geoRedirect ...

[7] <script>\n\tvar espn = espn || {};\n\tespn.isOneSite = false;\n\tespn.bu ...

[8] <script src="https://a.espncdn.com/redesign/0.591.3/node_modules/espn-la ...

[9] <script type="text/javascript">\n\t(function () {\n\t\tvar featureGating ...

[10] <script>\n\t\twindow.googletag = window.googletag || {};\n\n\t\t(functio ...

[11] <script type="text/javascript">\n\tif( typeof s_omni === "undefined" ) w ...

[12] <script type="text/javascript" src="https://a.espncdn.com/prod/scripts/a ...

[13] <script>\n\t// Picture element HTML shim|v it for old IE (pairs with Pic ...

[14] <script type="text/javascript">\n\t\t\tvar abtestData = {};\n\t\t\t\n\t\ ...

[15] <script type="text/javascript">\n\t\tvar espn = espn || {};\n\t\tespn.na ...

[16] <script type="text/javascript">\n\n var __dataLayer = window.__dataLa ...

[17] <script>\n\tvar espn_ui = window.espn_ui || {};\n\tespn_ui.staticRef = " ...

[18] <script src="https://a.espncdn.com/redesign/0.591.3/js/espn-critical.js" ...

[19] <script type="text/javascript">\n\t\t\tvar espn = espn || {};\n\n\t\t\t/ ...

[20] <script type="text/javascript">jQuery.subscribe('espn.defer.end', functi ...

...Big oof. There’s 27 scripts here and just parsing through the start of the script as they are in XML is not helpful…So let’s get the raw text from each of these nodes and see if we can find the espn.gamepackage.probability element which we’re looking for!

raw_espn_text <- raw_espn_html %>%

html_nodes("script") %>%

html_text()

raw_espn_text %>%

str_which("espn.gamepackage.probability")[1] 23Ok! So we’re looking for the 23rd node, I’m going to hide the output inside an expandable tag, as it’s a long output!

example_embed_json <- raw_espn_html %>%

html_nodes("script") %>%

.[23] %>%

html_text()Example Embed JSON